News

In-House Hackathon

APRIL decided to hold an in-house hackathon to work on the Cybersees project. This involved bringing everyone up to speed on the state of the project, followed by 3 days of intense work to reach our goal. On day 1 we did code cleanup and basic testing in the lab. On day 2 we had all sorts of things happen, from a broken drive train to a successful lawnmower pattern run! We left off by packing for the next day, and on day 3 we sent a team out into the field to visit Smiths Creek Landfill in St. Clair County, MI. There we successfully completed a lawnmower pattern. We learned a lot about the limitations of our system, and now that we are back home we are going back to the drawing board on how to scale our solutions. All in all it was a great week of hacking for the APRIL team!

CSEG Volleyball

Members of the APRIL lab got out on a Saturday afternoon to play in a friendly volleyball tournament with other computer science graduate students. After a few games we were getting our bump, set, kill in good shape! A fun time was had by all, and we enjoyed several games out in the sand!

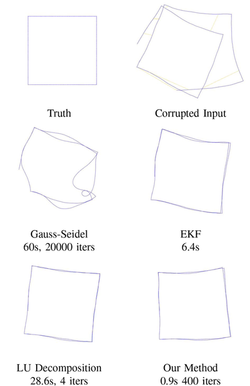

Google Scholar Classic Papers

Our paper “Fast iterative alignment of pose graphs with poor initial estimates” has been included in Google Scholar’s collection “Classic Papers: Articles that Have Stood the Test of Time.” To be named a Classic Paper, an article must be among the ten most-cited works in its area published ten years earlier. Check out our paper and the rest of the Robotics Top-Ten here!

Robot Race

May 1st was a great day for racing! Two daring teams came out to accept the challenge, and one walked away Robot Race Champion. The task was:

Given an APRIL robot, write a controller that follows a human carrying a saber through a tricky obstacle course set up by our very own APRIL officials. The total time allotted was 2 hours, and each team had 3 people on it.

The competition was intense, and each group had its eye on the prize. At the halfway point the teams were starting to see their robots move, and by race time it was anyone's game. Each team had 3 timed trials through the course. In the end the Corso Lab Team were our winners. The goal was to get people out working together on robots, and seeing our robots in action was exciting. All our competitors did a great job! Stay tuned for when we will host our next robot race!

AprilTag Update

The C version of the AprilTag library has been updated with some important enhancements and improvements, and some basic support for OpenCV integration. Get it here: apriltag-2016-12-01.tgz

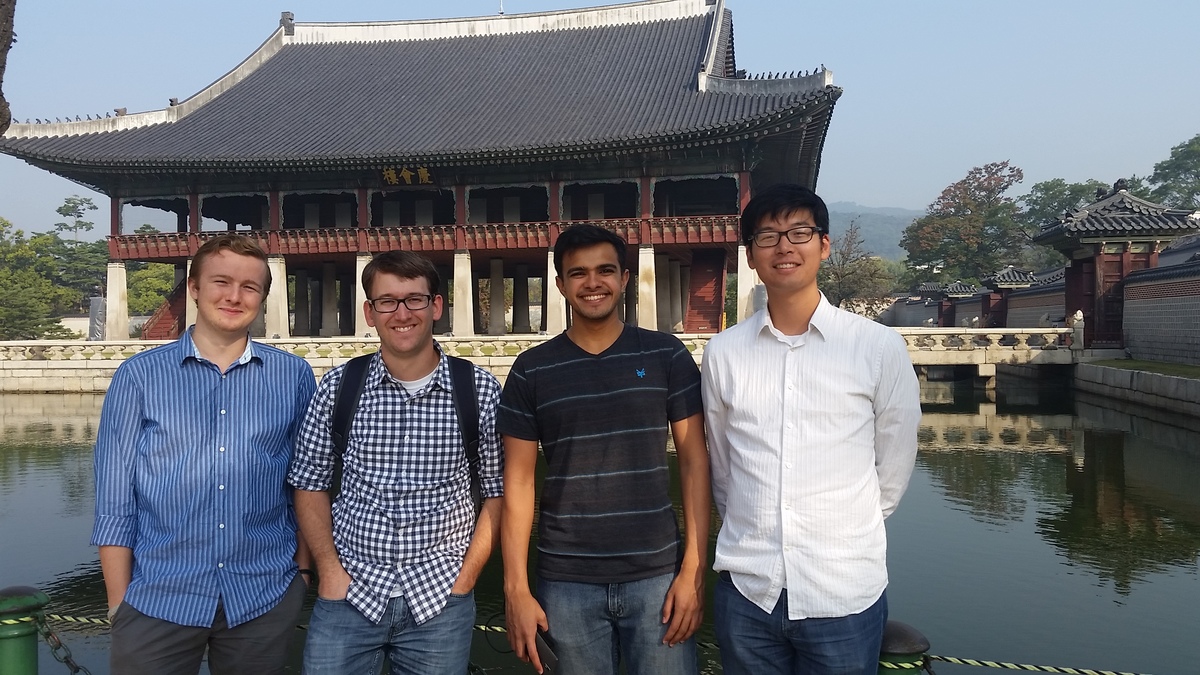

APRIL Lab Members present papers at IROS2016

APRIL Lab members John Wang, Rob Goeddel, Carl Kershaw, and Dhanvin Mehta attended IROS2016 in Daejeon, South Korea. Presented papers were:

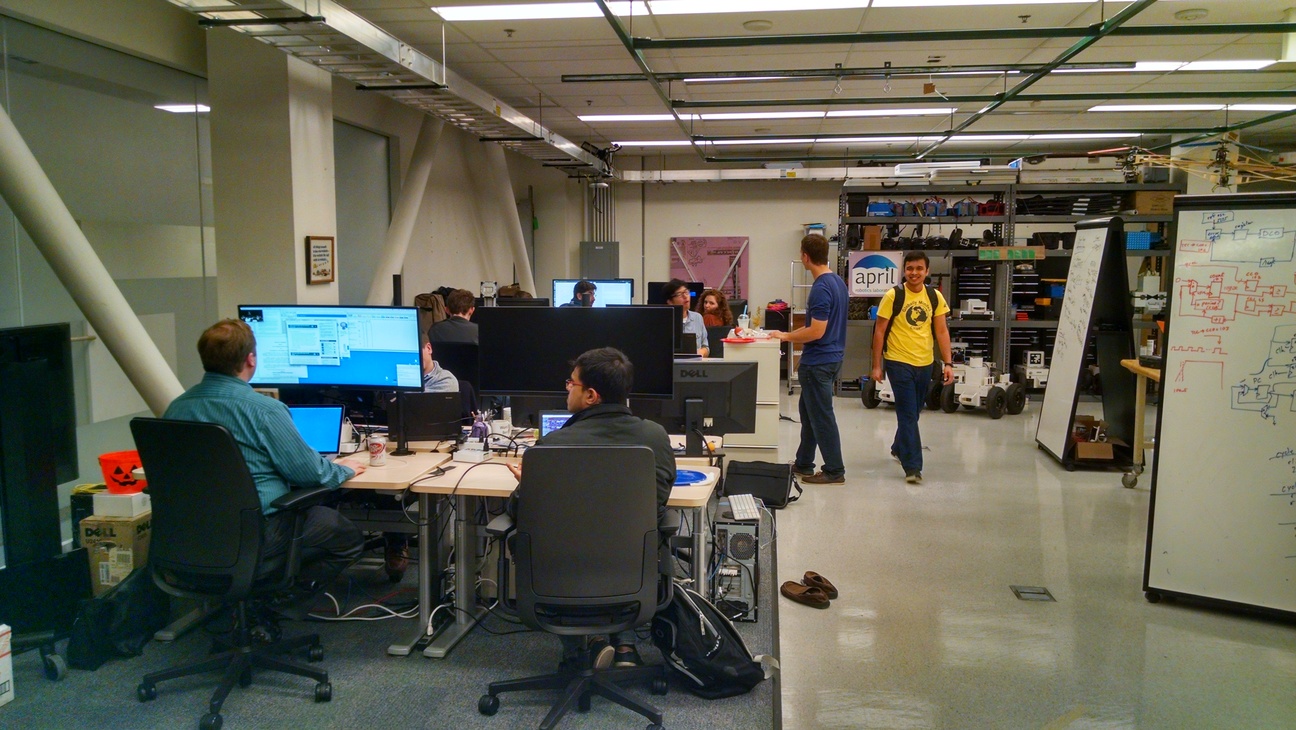

Lab Renovation

Michigan Robotics is growing, a fact that is all the more evident when you visit our bustling lab space in the Bob and Betty Beyster Building on the University of Michigan’s North Campus. When the Laboratory for Progress recently moved in, joining the APRIL Lab and the Intelligent Robotics Lab, we used it as an opportunity to rethink our lab space. Come visit us, now that we’re all moved in!

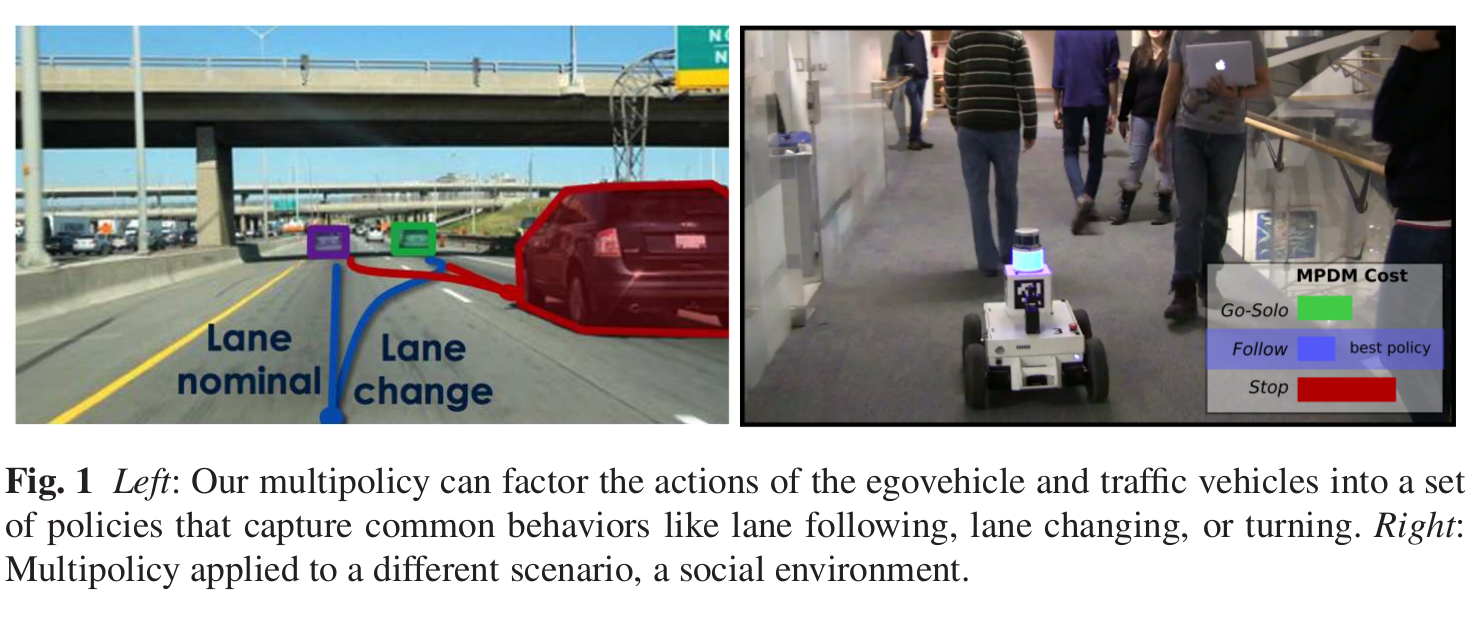

Multi-Policy Decision-Making in the News

Multi-Policy Decision-Making (MPDM) is a novel approach we have developed for navigation in dynamic multi-agent environments. Rather than specifying the trajectory of the robot explicitly, the planning process selects one of a set of closed-loop behaviors whose utility can be predicted through forward simulations that capture the complex interactions between agents. We have published several papers on this topic (1, 2, 3) and this work has recently been highlighted by IEEE Spectrum. We have more exciting developments on the way for MPDM, so stay tuned!
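
For readers curious what "selecting among closed-loop behaviors via forward simulation" can look like, here is a toy sketch in Python. It is not our actual implementation: the 1-D corridor world, the three policies (`go`, `stop`, `follow`), the constant-velocity pedestrian, and the utility function (progress minus a proximity penalty) are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class State:
    robot: float       # robot position along a 1-D corridor (m)
    pedestrian: float  # pedestrian position along the same corridor (m)


# Hypothetical closed-loop policies: each maps the current state to a
# robot velocity command (m/s), so it reacts to the world as it evolves.
def go(state: State) -> float:
    return 1.0


def stop(state: State) -> float:
    return 0.0


def follow(state: State) -> float:
    # Try to hold roughly a 1 m gap behind the pedestrian, capped at 1 m/s.
    gap = state.pedestrian - state.robot
    return max(0.0, min(1.0, gap - 1.0))


POLICIES: Dict[str, Callable[[State], float]] = {
    "go": go,
    "stop": stop,
    "follow": follow,
}


def simulate(state: State, policy: Callable[[State], float],
             horizon: float = 5.0, dt: float = 0.1,
             ped_velocity: float = 0.5) -> float:
    """Forward-simulate one policy and return its (assumed) utility."""
    robot, ped = state.robot, state.pedestrian
    start = robot
    penalty = 0.0
    for _ in range(int(round(horizon / dt))):
        # The policy is re-evaluated every step (closed loop).
        robot += policy(State(robot, ped)) * dt
        # Assumed pedestrian model: constant velocity.
        ped += ped_velocity * dt
        # Penalize time spent closer than 0.5 m to the pedestrian.
        if abs(ped - robot) < 0.5:
            penalty += 10.0 * dt
    return (robot - start) - penalty


def choose_policy(state: State) -> Tuple[str, Dict[str, float]]:
    """Evaluate every candidate policy and pick the highest-utility one."""
    utilities = {name: simulate(state, p) for name, p in POLICIES.items()}
    best = max(utilities, key=utilities.get)
    return best, utilities


if __name__ == "__main__":
    best, utilities = choose_policy(State(robot=0.0, pedestrian=2.0))
    print(best, utilities)
```

With a slow pedestrian 2 m ahead, blindly driving forward runs into the proximity penalty and stopping makes no progress, so this toy planner ends up choosing `follow`. In a real system the planner would be re-run continuously, so the selected behavior can change as the scene does.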